An immersive experience that makes you feel as if you are there: KDDI Research’s “360-degree video playback application for mobile devices,” a “sound” technology for immersive communication as if you were in the same place

6/24/25

“The World in Our Minds 2024: Ultra-Diversity,” organized by B Lab, showcases unique research findings from various companies, universities, and institutions striving to realize a neurodiverse society. KDDI Research exhibited an application themed around “sound” that allows users to experience spatial audio from specific areas in their surroundings, synchronized with 360-degree video.

Nanako Ishido (▲Photo 9▲), Director of B Lab and promoter of “The World in Our Minds” exhibition, interviewed Toshiharu Horiuchi (▲Photo 1▲) and Suguru Yachi (▲Photo 2▲) from KDDI Research. They discussed the background of their R&D efforts, the details of their 360-degree video playback app for mobile devices, and their latest research findings.

<MEMBER>

Toshiharu Horiuchi XR Division, Advanced Technology Laboratories, KDDI Research, Inc. (Concurrent position: Advanced Technology Research Research & Development Division, Technology Strategy & Planning Headquarters, KDDI CORPORATION)

Suguru Yachi Advanced Technology Research Research & Development Division, Technology Strategy & Planning Headquarters, KDDI CORPORATION (Concurrent position: XR Division, Advanced Technology Laboratories, KDDI Research, Inc.)

Louder as you get closer, quieter as you move away

Experience sound “movement” in a 360-degree video.

Ishido: KDDI Research took part in “The World in Our Minds 2024,” and your exhibit was themed around “sound.” Could you describe what you exhibited and your latest research?

Mr. Horiuchi: At the exhibition, KDDI Research presented the “360-degree video playback application for mobile devices” that we have been developing, under the theme “Feel the scenery you want to see through sound.” Our starting point was a question: could people communicating with someone in a remote location feel immersed, and could a phone conversation carry a sense of intimacy? To make communication more immersive and intimate, we are conducting multimodal research on sight, sound, and touch.

Let me explain what we exhibited, the technology and innovations behind it, and the background to our work. 360-degree videos are increasingly viewed on YouTube and similar services. They are live-action videos in which viewers can look in any direction they like; in other words, viewers can experience movement. They are expected to be used in a variety of fields, including education, medicine, entertainment, and remote communication.

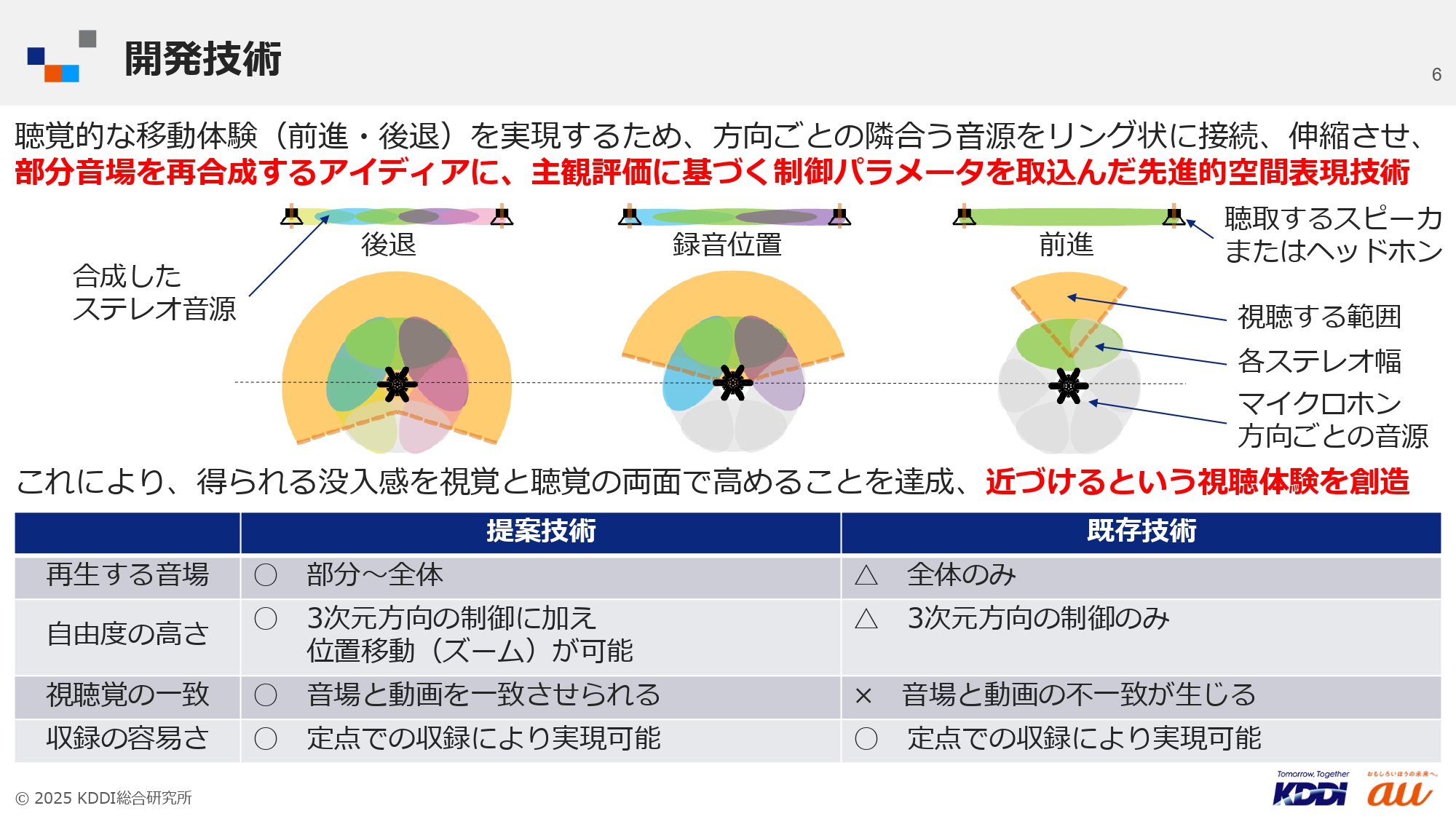

However, while viewers could experience movement visually, the sound did not match the direction and range they were looking at, because the recorded sound is spread over the whole scene. There was no auditory sense of movement. The sound fell short, and that is the background to our development: we wanted to strengthen immersion in both sight and hearing. Looking ahead to communication through all five senses, we thought that acoustic technology that lets users hear sound from the direction and range they are looking at would be key to immersive, realistic, and intimate communication.

KDDI Research therefore developed a system that lets you experience movement visually while, at the same time, hearing sounds approach and recede.

We then built an application that lets visitors experience this, and exhibited it. (▲Picture 3▲)

Let me show exactly what the application does by playing back a video recorded with the cooperation of the Tokyo Philharmonic Chorus and Mr. Ryota Fujimaki. A camera and microphone were placed at Mr. Fujimaki’s position in the center of the stage to capture 360-degree images of the surroundings and record the sound. When you play this video in the application and, for example, move toward or away from the female chorus on the left side of the stage or the male chorus on the right, or zoom out, the sound changes along with the picture. You can also isolate one part and bring its sound to the center, or listen to the ensemble as a whole. (▲Picture 4▲)

In this technique, sound is recorded in surround. Normally, playback can only reproduce the sound as heard at the recording position. We therefore use an unconventional method to create sound equivalent to moving forward or backward, toward or away from a source. This is a spatial audio reproduction technology with subjective control: it builds “how the sound is actually perceived by listeners” into the processing. Through these efforts, we have made it possible to experience movement both visually and aurally. (▲Picture 5▲)

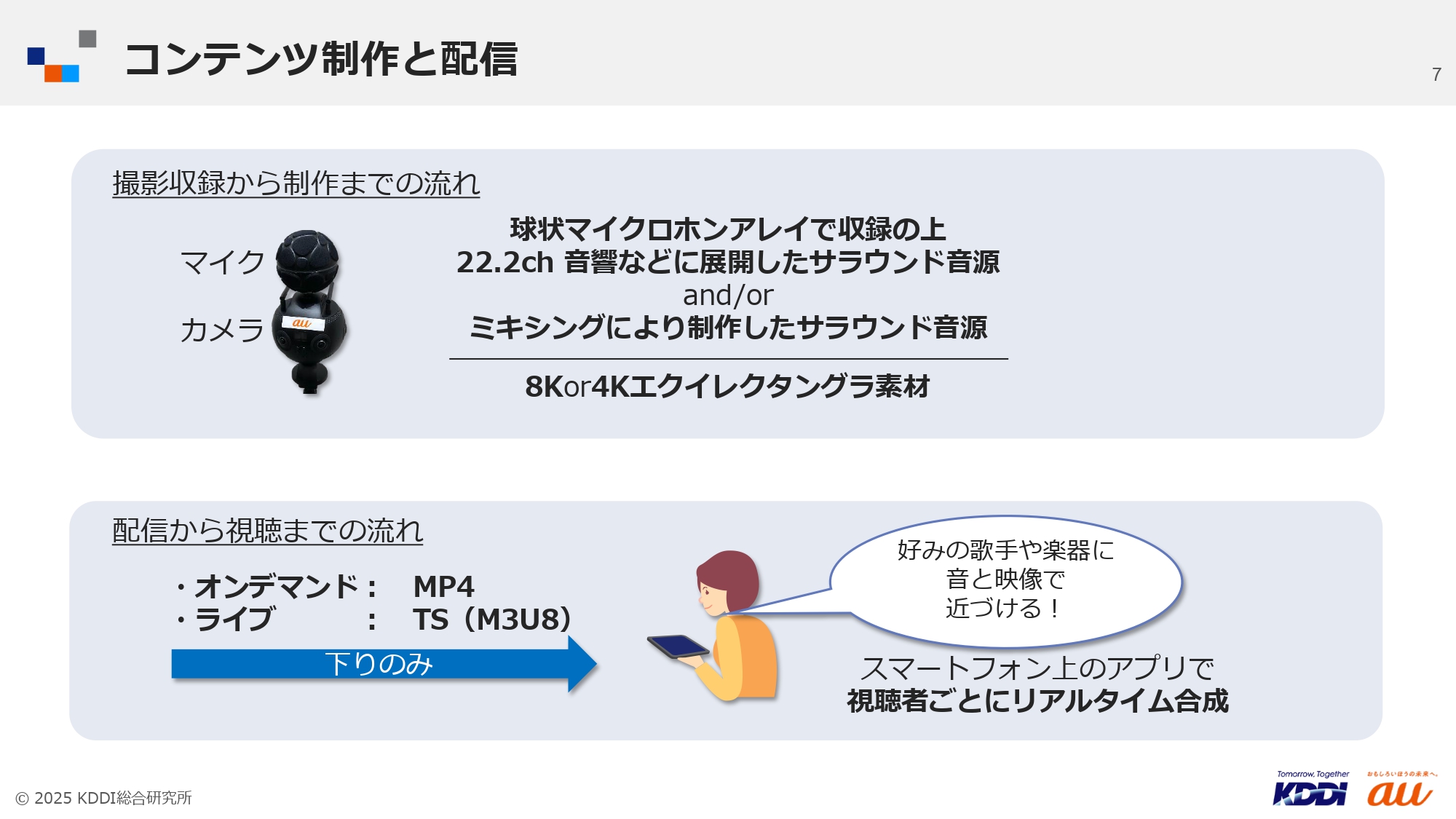

We have also worked to simplify content production and distribution. Specifically, we stack an ordinary VR camera and a spherical microphone array, with the microphone on top and the camera below, capture the entire space in one take, and distribute the recorded material as a whole. Users receive the material, play it back on a smartphone, and simply manipulate it freely to view any part of the space and hear the sound at that location. (▲Picture 6▲)
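The interview does not say how the application pulls out the sound for a chosen direction from the spherical microphone array. As a hedged illustration only, one common textbook approach (not necessarily KDDI Research’s method) is to convert the array signals to first-order Ambisonics (B-format channels W, X, Y, Z) and steer a “virtual microphone” toward the direction the viewer is looking. The sketch assumes the signals are already in B-format with a simplified normalization (W carries the source at unit gain; real recordings use conventions such as FuMa or SN3D), uses the usual Ambisonics axes (x forward, y left, azimuth counterclockwise), and all function names are hypothetical.

```python
import math

def _unit_vector(azimuth, elevation):
    # Direction cosines for (azimuth, elevation) in radians; x forward, y left, z up.
    return (math.cos(azimuth) * math.cos(elevation),
            math.sin(azimuth) * math.cos(elevation),
            math.sin(elevation))

def encode_plane_wave(samples, azimuth, elevation):
    """Hypothetical helper: encode a mono source arriving from (azimuth, elevation)
    into simplified first-order B-format (W, X, Y, Z)."""
    dx, dy, dz = _unit_vector(azimuth, elevation)
    w = [float(s) for s in samples]
    return (w, [s * dx for s in w], [s * dy for s in w], [s * dz for s in w])

def mix(*scenes):
    # Sum several B-format scenes channel by channel, sample by sample.
    return tuple([sum(values) for values in zip(*channel)] for channel in zip(*scenes))

def virtual_microphone(scene, azimuth, elevation, pattern=0.5):
    """Steer a first-order virtual microphone toward (azimuth, elevation).
    pattern=1.0 is omnidirectional, 0.5 cardioid, 0.0 figure-of-eight."""
    w, x, y, z = scene
    dx, dy, dz = _unit_vector(azimuth, elevation)
    return [pattern * wi + (1.0 - pattern) * (dx * xi + dy * yi + dz * zi)
            for wi, xi, yi, zi in zip(w, x, y, z)]

# Illustrative scene: one part on the viewer's left, another on the right.
left_part = encode_plane_wave([1.0, 0.5, -0.25], math.pi / 2, 0.0)
right_part = encode_plane_wave([0.2, -0.4, 0.8], -math.pi / 2, 0.0)
scene = mix(left_part, right_part)

# Looking left with a cardioid keeps the left part and rejects the right one.
focused = virtual_microphone(scene, math.pi / 2, 0.0)
print([round(v, 6) for v in focused])  # [1.0, 0.5, -0.25]
```

Steering the cardioid toward the left part passes it at unit gain and places the opposite part in the pattern’s null. A real system would also need the array-to-B-format encoding stage and careful handling of normalization, spatial aliasing, and higher orders, all of which this sketch omits.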

From actual use in the field of music, such as idol groups and choirs

to various fields such as education, art, and medicine in the future.

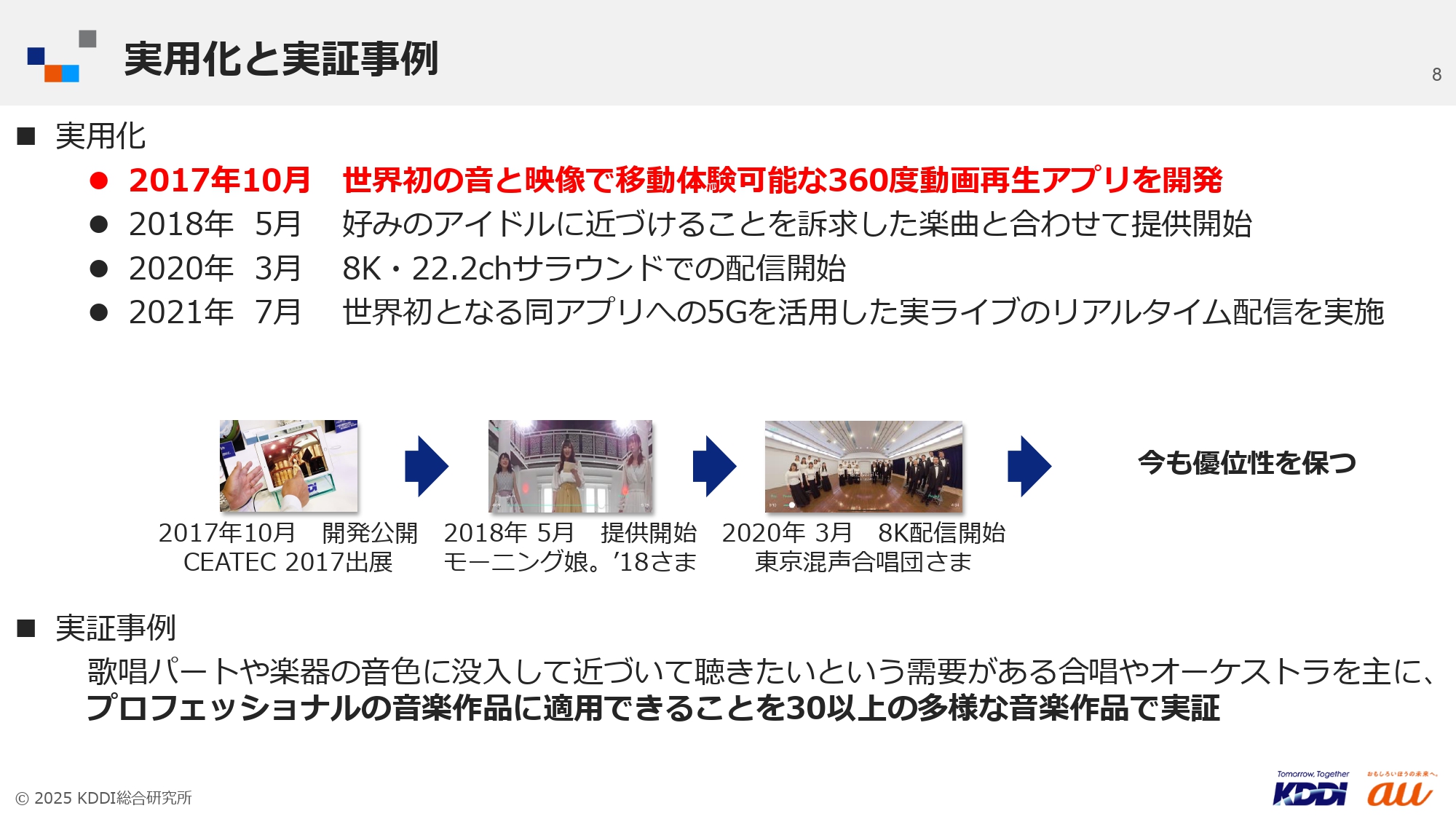

KDDI Research began this research in October 2017. The application, the world’s first 360-degree video playback application that lets users experience movement through sound as well as images, was provided to an idol group, and the Tokyo Mixed Chorus used it as a potential teaching tool for part-by-part practice. It is now also used by various other music groups, including J-POP acts. (▲Picture 7▲)

Later, when music events could not be held during the COVID-19 pandemic, various groups used the application to offer an “immersive, theater-like experience” or a “unique virtual experience” even though live performances were impossible. We believe VR technology can also contribute, to a certain extent, to improving and passing on skills in choir and ensemble practice.

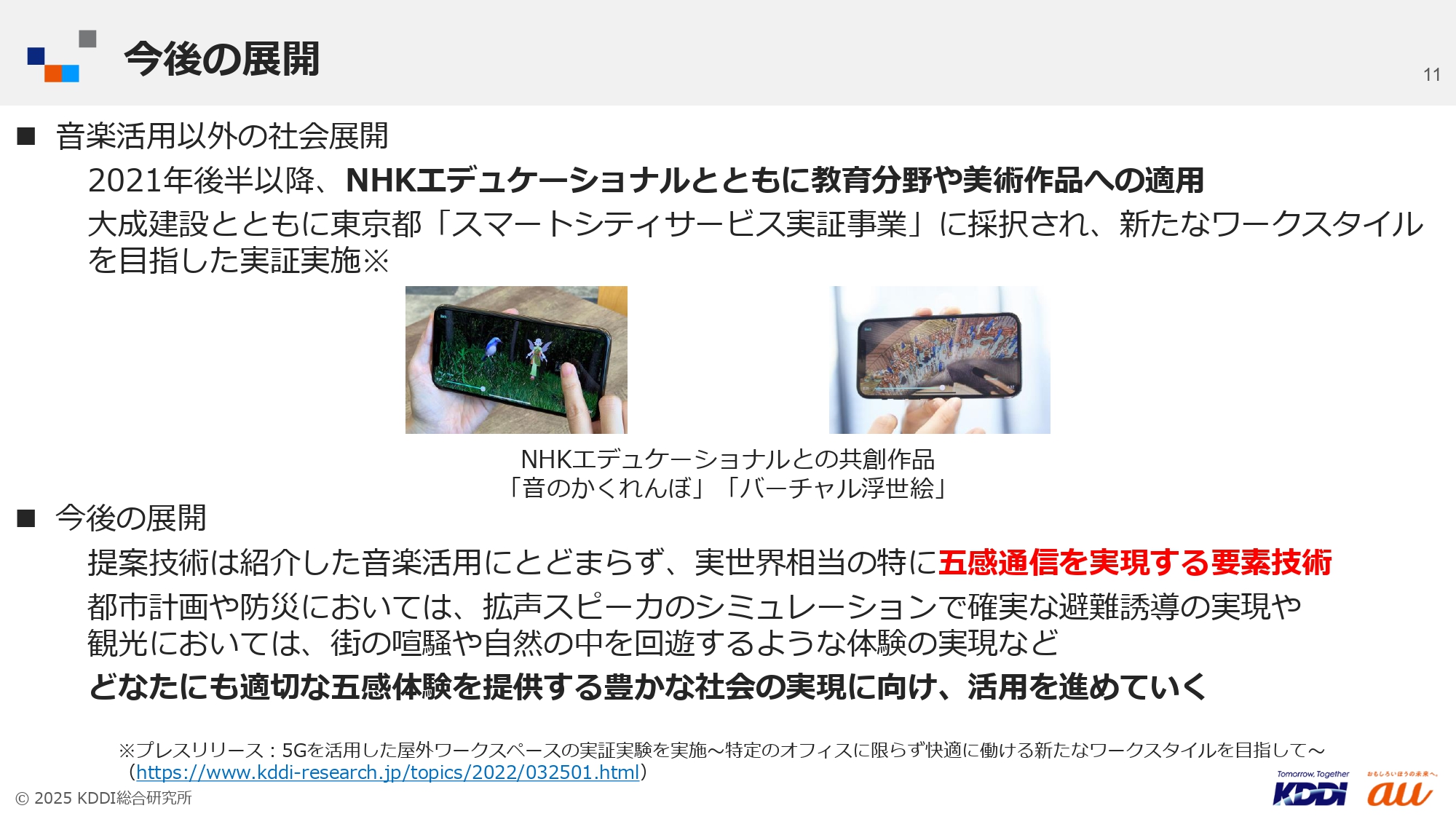

So far, the system has been used mainly for music, but since the second half of 2021 we have been promoting its use in education and works of art. (▲Picture 8▲)

In teleconferences, too, people sometimes say, “I want to hear this speaker’s voice better.” We are also considering new uses in response to changing work styles, such as telework.

In this way, KDDI Research hopes to realize “five-sense communication.” In urban planning and disaster prevention, this technology could also be used to verify “where to place speakers and how they will sound” for evacuation guidance. It could also be used for virtual travel, reproducing the actual local sounds as you walk through a city built as a digital twin. Building on this technology, we want to work toward an affluent society in which everyone can have an appropriate sensory experience.

By controlling audible sounds

I want to create a “comfortable space” for each and every one of us.

Following Mr. Horiuchi’s explanation, Nanako Ishido, Director of B Lab, asked about the latest research results on the 360-degree video playback application for mobile devices, efforts toward a neurodiverse society, and future prospects.

Ishido: In video, the technology has advanced to the point where users can change their viewpoint, zoom in, and engage actively, and I found it very interesting that the same is becoming possible with sound. Your explanation showed that technology letting users hear the sounds they want to hear is already implemented. What kind of technology would go further and let users control sound even more actively, for example by changing volumes?

Mr. Horiuchi: The world we live in is too full of sound, so I think “technology that changes the balance of sounds” is one possibility. It could lower the volume of sounds we don’t want to hear and raise the volume of sounds we do, or let older people who have difficulty hearing high-frequency sounds adjust those frequencies.
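As a minimal sketch of what “changing the balance of sounds” could mean numerically, the code below assumes each sound source has already been separated into its own track (the hard part, which the interview does not describe) and simply remixes the tracks with user-chosen level changes in decibels. The function names and sample data are hypothetical; this is not KDDI Research’s implementation.

```python
def db_to_gain(db):
    """Convert a level change in decibels to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)

def rebalance(sources, gains_db):
    """Remix separated source tracks with per-source level changes.

    sources:  dict mapping a source name to a list of samples (equal lengths)
    gains_db: dict mapping a source name to a level change in dB;
              sources not listed are left unchanged (0 dB)
    """
    lengths = {len(samples) for samples in sources.values()}
    if len(lengths) != 1:
        raise ValueError("need at least one track, and all tracks must have the same length")
    out = [0.0] * lengths.pop()
    for name, samples in sources.items():
        gain = db_to_gain(gains_db.get(name, 0.0))
        for i, sample in enumerate(samples):
            out[i] += gain * sample
    return out

# Hypothetical example: keep the voice as is and turn background noise down by 20 dB.
tracks = {
    "voice": [0.5, -0.5, 0.25],
    "background": [0.4, 0.4, -0.4],
}
mixed = rebalance(tracks, {"background": -20.0})
print([round(v, 3) for v in mixed])  # [0.54, -0.46, 0.21]
```

A frequency-dependent version of the same idea, for example boosting high frequencies for listeners with high-frequency hearing loss, would apply gains per frequency band rather than per source.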

Ishido: The reason I asked you to exhibit at “The World in Our Minds” is that I want to convey the potential of exactly this kind of technology to many people. For example, at parties most people focus on the voices of the people in front of them, but some people with ASD hear every sound at the same level and have trouble concentrating on the person they are talking to. I thought this kind of technology could help them adjust which sounds to focus on so they can follow the conversation. That is a real possibility, isn’t it?

Mr. Horiuchi: It is. I think general hearing aids are designed to target the person in front of you and pick up their voice, but I believe that in the future they will be tuned more deeply to each individual. Specifically, we will need technology that identifies the sounds a person has difficulty with and makes them easier to hear.

Ishido: Some readers may have wondered how sound technology relates to “The World in Our Minds” and neurodiversity. I believe this technology is useful for creating environments that deliver sound each individual can hear comfortably. In fact, when I asked a psychiatrist about it, he said, “It’s very, very interesting, and I would love to try it in clinical practice. It would be good if we could work on it through joint research.”

What has the response from users been? With the idol group you mentioned, for example, I imagine that wanting to hear only your favorite member (your “oshi”) is an easy way to use the system. What kinds of use have impressed actual users, and how do most people use it?

Mr. Horiuchi: Because idol groups have multiple members, each fan, that is, each user of the application, has a different favorite. In fully produced content, a fan’s favorite member is not necessarily in the center, so many fans enjoy listening with their favorite always placed in the center, and they were very pleased. The idol group members, for their part, said they were glad to be the center, even if only virtually, and to have their fans listen to them that way.

When the application has been used with choirs, people have told us that once viewers can move closer to or farther from the singers, listening to the piece as one whole is no longer a given. I have also heard composers say they will have to write music with this way of listening and enjoying in mind. Depending on the score, some instruments play only in a few places; I was struck to hear that this could bore listeners who focus on them, and that it may change how pieces are composed.

Ishido: I see. So the performers’ way of expressing themselves will change too. Just as technology creates or changes forms of expression, a member of an idol group, for example, may become more conscious of performing as if they were at the center. Performers change and composers change, which creates opportunities for new music and new performances. Is that the kind of feedback you are actually hearing?

Mr. Horiuchi: Yes. We have heard from composers, and choral groups have also approached us.

Ishido: I would very much like to see that happen. People will be able to listen to music in a way tailored to them, and new forms of expression will emerge on the premise that individual listening styles change. Many of your examples came from music, but you mentioned that the scope of application is expanding. I recall there were also applications to works of art and ukiyo-e prints. Could you tell us about other applications?

Mr. Horiuchi: Beyond education and art, we are now researching and developing applications in sports. The system has already been used for figure skating and road cycling.

Ishido: What happens when you introduce it in figure skating?

Mr. Horiuchi: Most figure skating video is shot from the outside, from the spectator seating around the rink, but VR covers 360 degrees, so viewers can place their viewpoint at the center of the rink and experience the skaters up close. I imagine this will not only provide an immersive skating experience but may also change how scoring is done. Looking outward from the inside may change the way we watch figure skating itself.

In addition, sound attenuates with distance; the sound of the blades on the ice, for example, has faded to the point of being inaudible by the time it reaches the spectator seats. Our goal is to record sound at the center of the rink and deliver it to viewers.
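The point that sound fades with distance can be made concrete with the free-field inverse-distance law, an idealization assumed here rather than stated in the interview: for a point source, sound pressure falls as 1/r, so the level drops by 20·log10(r_far / r_near) dB, about 6 dB per doubling of distance. The distances below are hypothetical, the function names are illustrative, and the model ignores air absorption, reflections from the rink, and crowd noise, all of which matter in a real venue.

```python
import math

def level_drop_db(r_near, r_far):
    """Level difference in dB between two distances from a point source,
    using the free-field inverse-distance (1/r pressure) law."""
    if r_near <= 0 or r_far <= 0:
        raise ValueError("distances must be positive")
    return 20.0 * math.log10(r_far / r_near)

def distance_gain(r_ref, r):
    """Linear amplitude factor for a listener at distance r, relative to r_ref."""
    return r_ref / r

print(round(level_drop_db(1.0, 2.0), 2))   # 6.02 (one doubling of distance)
print(round(level_drop_db(1.0, 30.0), 1))  # 29.5 (hypothetical: 1 m vs. 30 m)
print(distance_gain(1.0, 4.0))             # 0.25
```

Run in reverse, the same relation gives the gain a renderer might apply as a virtual listener moves toward a source, which is one simple way to approximate an “approaching” effect; the interview notes that KDDI Research’s own method additionally builds in how listeners subjectively perceive the sound.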

Ishido: If that happens, video may let us experience more than live viewing does, possibly going beyond watching in person. And if touch can also be transmitted, as you mentioned earlier, we may be able to enjoy sound, images, and touch with more realism than watching a game from a distance through binoculars.

Mr. Horiuchi: I really believe this will happen, and I am working toward it, but I also believe the two can happen at the same time. It could be like mixed reality, where people enjoy real and virtual experiences simultaneously in concert halls and sports venues. Some people use binoculars at concerts, but if they can also enjoy the performance through smartphones or AR glasses at the same time, the content gains added value. Watching at home will be a wonderful experience, and for people at the venue, I believe it will offer an experience beyond what has been possible in person so far, rather than simply being there.

Ishido: I also find the idea of virtual travel very interesting. Virtual travel through video already exists, but this would also make it possible to reproduce, for example, how the conversations in local shops become more prominent as you approach a spot you have chosen.

Mr. Horiuchi: One of the reasons I enjoy walking around a city is that the sounds coming from the various shops and the voices calling out to passersby keep changing. We are developing technology in which sound and images respond to the user’s movements and needs, rather than simply presenting images when the user actively moves.

Ishido: You mentioned earlier that this content can be shot with an ordinary VR camera. Will ordinary users be able to create simple content themselves in the future, including new sound experiences like this?

Mr. Horiuchi: It may take some time, but I think it will become possible. Small, easy-to-use VR cameras are already available, and many customers use them for video. For sound, however, you need a somewhat specialized microphone, and if you want to guarantee a certain level of quality, there are still few compact options, so equipment availability will take a little more time. Once the equipment is available, I think it will soon be possible for customers to shoot and upload their own content.

Ishido: So is the cost of the equipment the main hurdle for the general public?

Mr. Horiuchi: Basically, I think it comes down to cost and the size of the equipment. We also believe that if we can build and provide a distribution platform, it will be achievable.

Ishido: From the viewpoint of neurodiversity, school classes are sometimes delivered on demand via video. If this technology were used for the recording, the teacher’s voice alone could be emphasized, for example.

Mr. Horiuchi: I think we can already do this using VR and 360-degree video technology. Until recently, the equipment was expensive, but now it has become much less expensive, and I think we will have to find out how far we can go with software.

Ishido: I think this exhibition grew out of a discussion about how we can freely change our viewpoint in video but not in sound. Is there anything KDDI Research would like to take on next to enrich the music experience and other experiences?

Mr. Yachi: For video, a video specialist on our team is working on this. Horiuchi specializes in acoustics, and I specialize in tactile research. At present, each of us is conducting research separately, in parallel. I am very interested to see what synergies emerge when these are experienced together as a multimodal experience in the future.

Ishido: I would love to learn about, and try, the experiences that become possible beyond that integration. In “The World in Our Minds,” there is a section where visitors use technology to experience the five senses as others perceive them, showing how the senses differ from person to person. Among the five senses, there is very little research on taste and smell, which is a challenge for us. Is transmitting taste and smell still a hurdle? As you said, Mr. Yachi, to “have people experience the senses in an integrated, more realistic way,” I think researchers would be interested to see how you would tackle taste and smell as well.

Mr. Horiuchi: As you say, we are also very interested in taste and smell, and we are now thinking about how to approach them. Many research institutes are starting to work on this, and some are producing interesting results, but we are still struggling with how to bring it to the general public. If anything, we feel we would like to try smell before taste.

Ishido: I also have the sense that some interesting results have emerged regarding smell. I think the general public would be delighted if you could show us integrated technology development, and even service design. Also, as engineers, I imagine you are always thinking that with more technology like this, we could realize a better world. Are there any areas of technology you are looking forward to, or areas where you expect this kind of technology to develop?

Mr. Horiuchi: From an acoustic point of view, that would be speaker technology. Reproducing frequencies from low to high requires a speaker of a certain size, and low frequencies in particular have required large speakers. Until now, speaker size has limited the frequencies that can be reproduced, but if small speakers could reproduce low frequencies, the reproducible range would widen. I believe that breakthroughs not only in size but also in sound generation methods and in materials technologies based on principles different from those used so far would open up tremendous possibilities.

Ishido: Materials is also a major research topic at the Graduate School of Media Design at Keio University, where I teach.

Mr. Yachi: We work with generative AI technology in our daily work and have built up a lot of knowledge. I find it very interesting that it can process far more data than humans can take in. Generative AI can already handle multimodal processing, combining text, speech, and images, for example. I am looking forward to seeing what happens when information from other modalities, such as the five senses, is combined with generative AI.

Ishido: Listening to you, I am excited, and I am sure that a “new normal” lifestyle, completely different from today’s, will be realized in the future. This interview is for the Neurodiversity Project, and I think the technology of the 360-degree video playback application can support, in many ways, people who as minorities face difficulties in daily life. To close, could each of you say a few words about what you would like to do from this perspective toward realizing a neurodiverse society?

For example, efforts to control physical space, such as the Quiet Hour, are emerging, but the beauty of digital technology is that it can create individually optimized spaces that are comfortable for people. In this regard, I felt that this technology could be used to create a comfortable environment for people when they walk in the city. What do you think?

Mr. Yachi: Noise-canceling earphones are becoming more and more popular. This technology cancels out sounds you do not want to hear. When I first learned about products using it, I had no idea they would become as widespread as they are today. I hope the technology we exhibited this time, along with the other technologies we are now working on, will be used by many people, spread through the market, and, together with others who share the same vision, help create a world where it is taken for granted.

Mr. Horiuchi: We live in a world where sound spreads wherever it is produced. Each person has their own set of sounds they find noisy or pleasant, and since those differ from person to person, I am always thinking about how to adapt sound to each individual’s needs. It is important not only to “emit” sound but also to “suppress” it, and I hope to create a better sound world by combining the two.

Ishido: I am very much looking forward to the realization of an individually optimized sound world. Thank you very much for your time today.